TestNG IRetryAnalyzer: How to Implement and Utilize

TestNG IRetryAnalyzer is a dependable solution to easily rerun failed tests.

In today’s agile world, automated testing is a crucial part of delivering software faster: developers check in smaller pieces of code more frequently, and continuous integration builds run automated tests on every change. As a result, the need for quick and accurate test reporting has never been more critical.

Reporting automated test results may sound simple, but many teams struggle with false positive test results, especially in UI automation. At larger organizations, where several product teams share environments and data and depend on one another’s services, false positives tend to appear intermittently, and running the exact same test again may well produce a pass. Even if a failure was valid at that moment, you usually don’t want to file a bug for something caused by a deploy to an environment or an unreproducible UI render. You definitely don’t want to hold up a release because you can’t determine whether the new feature caused the failures.

Luckily, many testing frameworks provide built-in solutions to this common obstacle. One I’ve found to be extremely robust and dependable is TestNG’s IRetryAnalyzer, which lets you rerun a failed test method a set number of times before declaring it failed. So, without further ado, let’s dive into how to implement TestNG’s IRetryAnalyzer.

The Interface

```java
package org.testng;

/**
 * Interface to implement to be able to have a chance to retry a failed test.
 *
 * @author tocman@gmail.com (Jeremie Lenfant-Engelmann)
 *
 */
public interface IRetryAnalyzer {

  /**
   * Returns true if the test method has to be retried, false otherwise.
   *
   * @param result The result of the test method that just ran.
   * @return true if the test method has to be retried, false otherwise.
   */
  public boolean retry(ITestResult result);
}
```

Let’s start with the interface. It has only one method, retry, which TestNG calls when a test method fails; the ITestResult argument gives you access to the details of that test run. Your implementation should return true to re-execute the failed test and false to stop retrying. The implementation below decides how many times to retry a failed test with a fixed counter, maxRetryCount, which is set to 2 retries.

```java
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;
import org.testng.IRetryAnalyzer;
import org.testng.ITestResult;

public class Retry implements IRetryAnalyzer {

    private static final Logger log = LoggerFactory.getLogger(Retry.class);

    private int retryCount = 0;
    private int maxRetryCount = 2;

    // Maps TestNG's numeric status codes to readable names for logging.
    public String getResultStatusName(int status) {
        String resultName = null;
        if (status == ITestResult.SUCCESS)
            resultName = "SUCCESS";
        if (status == ITestResult.FAILURE)
            resultName = "FAILURE";
        if (status == ITestResult.SKIP)
            resultName = "SKIP";
        return resultName;
    }

    /**
     * Returns true if the test method should be retried, false otherwise.
     * TestNG passes in the ITestResult of the test method that just ran.
     *
     * @see org.testng.IRetryAnalyzer#retry(org.testng.ITestResult)
     */
    @Override
    public boolean retry(ITestResult result) {
        if (!result.isSuccess()) {
            if (retryCount < maxRetryCount) {
                log.info("Retrying test " + result.getName() + " with status "
                        + "'" + getResultStatusName(result.getStatus()) + "'"
                        + " for the " + (retryCount + 1) + " time(s).");
                result.setStatus(ITestResult.SKIP);
                retryCount++;
                return true;
            } else {
                result.setStatus(ITestResult.FAILURE);
            }
        } else {
            result.setStatus(ITestResult.SUCCESS);
        }
        return false;
    }
}
```
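To see the counting logic on its own, here is a minimal, TestNG-free sketch that models how a runner re-invokes a failing test while the analyzer keeps returning true. RetryModel, RetryPolicy, CountingRetry, Outcome, and runWithRetries are hypothetical names for illustration only, not TestNG API, and the model assumes (as described above) that retry is consulted only after a failed attempt.

```java
import java.util.function.BooleanSupplier;

// TestNG-free model of the retry contract. All names here are hypothetical
// stand-ins for illustration, not TestNG API.
public class RetryModel {

    // Mirrors the shape of IRetryAnalyzer.retry(): consulted after a failed
    // attempt, returns true to request another run.
    interface RetryPolicy {
        boolean retry();
    }

    // Same fixed-counter logic as the Retry class: at most maxRetryCount re-runs.
    static class CountingRetry implements RetryPolicy {
        private final int maxRetryCount;
        private int retryCount = 0;

        CountingRetry(int maxRetryCount) {
            this.maxRetryCount = maxRetryCount;
        }

        @Override
        public boolean retry() {
            if (retryCount < maxRetryCount) {
                retryCount++;
                return true;
            }
            return false;
        }
    }

    // Total attempts made and the outcome of the final attempt.
    static class Outcome {
        final int attempts;
        final boolean passed;

        Outcome(int attempts, boolean passed) {
            this.attempts = attempts;
            this.passed = passed;
        }
    }

    // Runs the test, re-running it after each failure while the policy allows.
    static Outcome runWithRetries(BooleanSupplier test, RetryPolicy policy) {
        int attempts = 0;
        while (true) {
            attempts++;
            boolean passed = test.getAsBoolean();
            // Stop on a pass, or on a failure the policy declines to retry.
            if (passed || !policy.retry()) {
                return new Outcome(attempts, passed);
            }
        }
    }

    public static void main(String[] args) {
        // Always fails: 1 initial run + 2 retries, then reported as a failure.
        Outcome alwaysFails = runWithRetries(() -> false, new CountingRetry(2));
        System.out.println("always failing: attempts=" + alwaysFails.attempts
                + ", passed=" + alwaysFails.passed); // attempts=3, passed=false

        // Fails on the first run, passes on the second: rescued by one retry.
        int[] calls = {0};
        Outcome flaky = runWithRetries(() -> ++calls[0] >= 2, new CountingRetry(2));
        System.out.println("flaky: attempts=" + flaky.attempts
                + ", passed=" + flaky.passed); // attempts=2, passed=true
    }
}
```

In this model, with maxRetryCount at 2 a test that always fails runs three times before it is reported as failed, while a test that fails once and then passes ends as a pass after its second attempt.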

How to Include IRetryAnalyzer in Your Tests

Now, let’s see how to actually use the retry analyzer in our tests. To quickly see how it works, add the retryAnalyzer attribute to your @Test annotation:

```java
@Test(retryAnalyzer = Retry.class)
public void test() {
    Assert.assertEquals(1, 0);
}
```

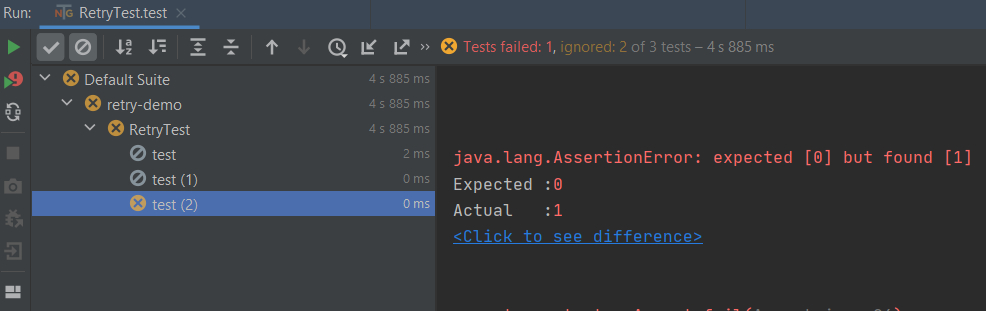

And if we run this test, we will get the following results:

You can see the test ran 3 times and was not marked as failed until after the two retries. Adding retryAnalyzer to individual tests is a decent way to quickly see how the analyzer works, but it’s not a great long-term solution: it clutters your test annotations and isn’t necessary. Instead, I suggest applying the retry analyzer at the test suite level, in your @BeforeSuite method. As you can see below, this is simple: TestNG’s ITestContext returns every test method via getAllTestMethods, and you attach the analyzer to each one.

```java
@BeforeSuite(alwaysRun = true)
public void setupSuite(ITestContext context) {
    // Attach a fresh Retry instance to every test method in this context.
    for (ITestNGMethod method : context.getAllTestMethods()) {
        method.setRetryAnalyzer(new Retry());
    }
}
```
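One detail in this loop is worth calling out: it creates a new Retry() for every method instead of sharing one instance. Because retryCount is instance state, a single shared analyzer would spend its retries on the first failing method and leave none for the rest. The stdlib-only simulation below illustrates that effect; SharedRetryDemo, CountingRetry, and runSuite are hypothetical names, and the model assumes the analyzer instance you attach is the one consulted for that method.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical, TestNG-free model of attaching retry analyzers to a suite.
public class SharedRetryDemo {

    // Same fixed-counter logic as the Retry class.
    static class CountingRetry {
        private final int maxRetryCount;
        private int retryCount = 0;

        CountingRetry(int maxRetryCount) {
            this.maxRetryCount = maxRetryCount;
        }

        // Called after a failed attempt; true means "run the test again".
        boolean retry() {
            if (retryCount < maxRetryCount) {
                retryCount++;
                return true;
            }
            return false;
        }
    }

    // Runs an always-failing test and returns how many times it executed.
    static int attemptsForAlwaysFailingTest(CountingRetry analyzer) {
        int attempts = 1;
        while (analyzer.retry()) {
            attempts++;
        }
        return attempts;
    }

    // Simulates a suite of always-failing methods. shareOne decides whether all
    // methods use one analyzer instance or each gets its own.
    static List<Integer> runSuite(int methodCount, boolean shareOne) {
        CountingRetry shared = new CountingRetry(2);
        List<Integer> attempts = new ArrayList<>();
        for (int i = 0; i < methodCount; i++) {
            CountingRetry analyzer = shareOne ? shared : new CountingRetry(2);
            attempts.add(attemptsForAlwaysFailingTest(analyzer));
        }
        return attempts;
    }

    public static void main(String[] args) {
        System.out.println("new instance per method: " + runSuite(3, false)); // [3, 3, 3]
        System.out.println("one shared instance:     " + runSuite(3, true));  // [3, 1, 1]
    }
}
```

With a fresh instance per method, every failing test gets its full two retries; with a shared instance, only the first one does.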

Once the analyzer is applied in your @BeforeSuite method, you can remove the retryAnalyzer attribute from your individual tests:

```java
@Test
public void test() {
    Assert.assertEquals(1, 0);
}
```

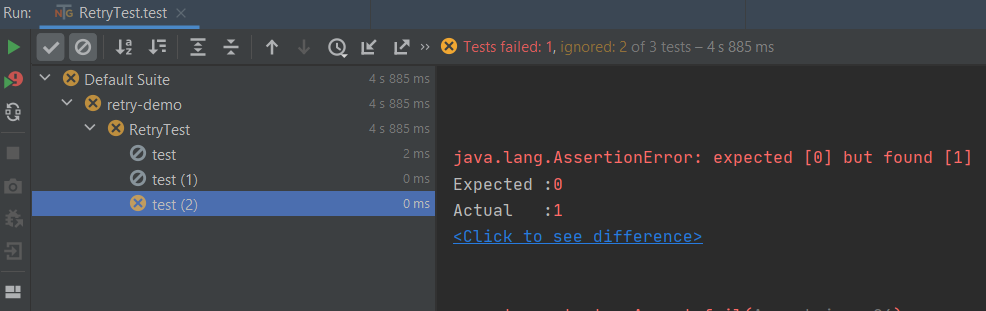

And after running again, we will get the same test results:

The TestNG IRetryAnalyzer Difference

This simple but impactful use of TestNG’s IRetryAnalyzer is a great way to increase the value of your test automation. Implementing the retry analyzer, especially at the test suite level, gives immediate returns not only to your testing team but to your entire product team.

As a tester, the first improvement you will likely notice is more accurate test automation reporting. Most importantly, over the long term you will save your team and organization money by not wasting time debugging inaccurate test results, which in turn accelerates your team’s product lifecycle.

TestNG IRetryAnalyzer & Automation Reporting with tapQA

The test automation consultants at tapQA specialize in powerful, effective QA automation strategies and techniques. If you would like to learn more about TestNG and how IRetryAnalyzer can help your organization, we would love to hear from you.

Aaron Gibbons

Aaron Gibbons is a Senior Automation Engineer who has been with tap|QA for over 7 years. He has extensive experience in Quality Assurance and Test Automation. He has implemented quality solutions for many of tap|QA's clients.
