How To Manage Sensitive Healthcare Test Data

One of the most challenging parts of testing software is managing the test data used throughout the software development life cycle (SDLC).

Today’s software systems usually consolidate and aggregate data from multiple upstream sources, which can sometimes lead to tangled dependencies and unclear data relationships.

Development tasks to overcome these types of obstacles can be challenging. When it comes time to test this software, creating reliable and security-conscious test data can seem overwhelming and incredibly time-consuming.

But there is another way to obtain this data and gain trust in it. You might even have the engineers on staff to do the necessary work. But first, more context:

Why it's difficult to create test data

It is labor-intensive and expensive to manually enter data into your systems. Testers need to spend their time actually testing their applications. No one is willing or able to dedicate most of their work time to data entry.

Test data is also consumed in large volumes. Depending on your needs, test data can be destroyed or altered during a testing session, which renders the account or data set obsolete. A variation of this problem arises with scheduled data, where new test accounts must be issued monthly, weekly, or on some other cycle.

When working with multiple linked systems or applications with many components, creating test data with all of the correct data dependencies can prove very challenging.

Healthcare applications provide their own unique test data challenges

Healthcare systems have two major additional requirements compared to other types of applications. First, the scope of data required is typically much larger: a single account requires many data points, often entered into separate applications. Second, data privacy regulations require extra care and consideration for all data used throughout a project, not just for users' data in production.

Knowing how difficult it is to create usable healthcare test data, it would be incredibly tempting to simply copy unsanitized production data into a testing environment. But doing this would grossly violate data privacy laws such as HIPAA.

How to import and sanitize production data

Here's an example from a recent project that could be applied to creating usable healthcare test data.

My team and I joined a software development program that was well underway. Our client was in the international tourism industry, so we regularly dealt with credit card data, Social Security numbers (SSNs), passports, and immigration data, all of which needed to be protected under several privacy laws.

The project's goal was to deliver next-generation technology solutions to replace several late-1990s systems used to operate the business.

We were asked to take over responsibility for verifying test data from a team that was disbanding. I learned early in the process that the standard practice was to import data from production, run it through an obfuscation script, and then investigate the new test data through exploratory testing, data sampling, and a small runbook for troubleshooting.

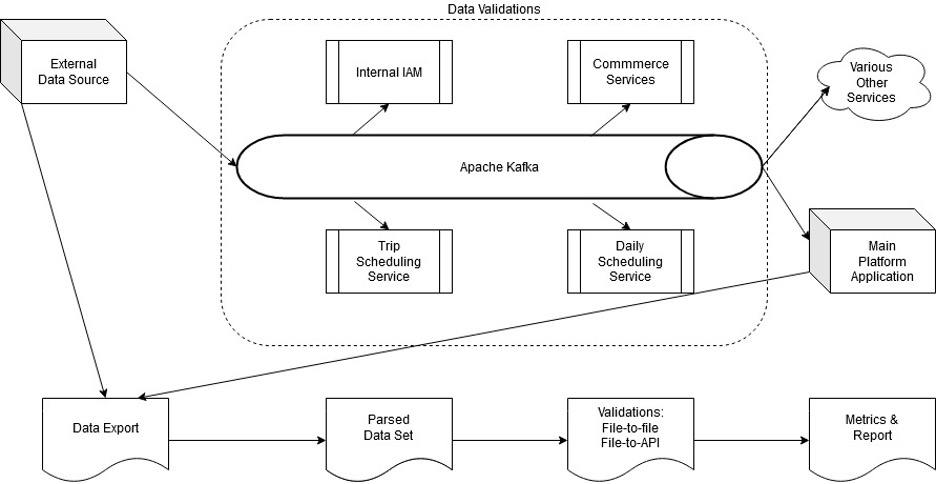

The tapQA team was not responsible for loading or obfuscating the data; the team that owned that system ran those scripts and then pushed all the sanitized data through Kafka. Our program used Kafka as a message bus, in both production and our testing environments, to publish any changes in state across our service-oriented architecture.

However, it was clear that several fields of sensitive data were altered by the scripts, to protect users’ privacy:

- Our software supported profile pictures. All production profile pictures were removed completely by the obfuscation.

- Names were changed; our system had the concept of travel parties and families, and the obfuscation did not change the declared family relationships.

- Any data fields that could be used to identify an account, like SSN, street address, and phone number, were scrubbed and populated with randomized data. Email addresses were also highly randomized, but they all originated from a mail server specific to our testing environments. Most of these fields had hard requirements to be populated.

- Some fields were stripped by the obfuscation and left blank. These were usually optional but commonly used fields like passport number, or fields that were deemed too risky to process, like credit card data.

- Several fields that held demographic-type data (passport country, state of residence, age, etc.) were processed through with no changes. This was a very conscious decision to increase the likelihood of edge cases surfacing during testing.
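The rules above can be sketched in a few lines of Python. This is only an illustration of the per-field approach, not the script the owning team actually ran: the field names, the `family_id` key used to carry family relationships, the placeholder name list, and the `mail.test.example` domain are all assumptions made for the example.

```python
import random
import string

# All names below are hypothetical; the real schema is not described in this article.
TEST_MAIL_DOMAIN = "mail.test.example"  # stand-in for the test-only mail server
PASS_THROUGH = ("family_id", "passport_country", "state_of_residence", "age")
BLANKED = ("passport_number", "credit_card_number")
FAKE_FIRST_NAMES = ("Alex", "Blake", "Casey", "Devon", "Emery")


def random_digits(rng, count):
    """Return a string of `count` random decimal digits."""
    return "".join(rng.choice(string.digits) for _ in range(count))


def obfuscate(record, rng):
    """Return a sanitized copy of one account record."""
    # Demographic fields and declared family links pass through unchanged.
    clean = {field: record.get(field) for field in PASS_THROUGH}
    # Profile pictures are removed completely.
    clean["profile_picture"] = None
    # Optional or high-risk fields are stripped and left blank.
    for field in BLANKED:
        clean[field] = ""
    # Names and identifying fields are replaced with randomized values.
    clean["first_name"] = rng.choice(FAKE_FIRST_NAMES)
    clean["last_name"] = "Tester" + random_digits(rng, 4)
    clean["ssn"] = "-".join(random_digits(rng, n) for n in (3, 2, 4))
    clean["phone"] = "555-" + random_digits(rng, 3) + "-" + random_digits(rng, 4)
    clean["street_address"] = f"{rng.randint(1, 9999)} Test Street"
    # Emails are randomized but always land on the test mail domain.
    mailbox = "".join(rng.choice(string.ascii_lowercase) for _ in range(12))
    clean["email"] = f"{mailbox}@{TEST_MAIL_DOMAIN}"
    return clean


original = {
    "first_name": "Maria", "last_name": "Lopez", "family_id": "F-17",
    "ssn": "078-05-1120", "phone": "612-555-0100",
    "street_address": "1 Main St", "email": "maria@example.com",
    "passport_number": "X1234567", "credit_card_number": "4111111111111111",
    "passport_country": "MX", "state_of_residence": "MN", "age": 42,
    "profile_picture": b"...",
}
sanitized = obfuscate(original, random.Random(42))
```

Building the sanitized record from an explicit list of pass-through fields, rather than copying everything and overwriting known sensitive fields, means a newly added column is dropped by default instead of leaking through.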

After we learned more about the obfuscation and the software, we constructed a diagram similar to the top line of the image above. Our version went into considerably more detail, as you would expect of a real work artifact. We also learned that the previous team had effectively dedicated a full 40-hour work week to their validation approach, which was based on exploratory testing.

tapQA's team of test automation engineers quickly began implementing a tool to follow the bottom line of the image above:

- Integrate with Apache Kafka to monitor test results in real time and to aid in troubleshooting.

- Read in data file exports from the external source and our system’s main application.

- Parse the data files into defined data sets.

- Iterate through the data sets, performing type checks, regex validations, and comparisons of the known imported values against the data available from system APIs, where appropriate.

- Deliver metrics on the validation tests, along with any suspect data points for further examination.
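As a rough illustration of the parse, validate, and report steps listed above, here is a minimal Python sketch. It is not the tool we built: the CSV layouts, field names, and regex rules are hypothetical, the second export stands in for the responses we fetched from system APIs, and the Kafka integration is left out entirely.

```python
import csv
import io
import re

# Hypothetical validation rules; the real rule set is not described in this article.
REGEX_RULES = {
    "ssn": re.compile(r"\d{3}-\d{2}-\d{4}"),
    "email": re.compile(r"[^@\s]+@mail\.test\.example"),
}
COMPARE_FIELDS = ("passport_country", "age")  # fields expected to pass through unchanged

SOURCE_EXPORT = (
    "account_id,passport_country,age\n"
    "1001,CA,34\n"
    "1002,US,61\n"
)
SYSTEM_EXPORT = (
    "account_id,passport_country,age,ssn,email\n"
    "1001,CA,34,123-45-6789,abc@mail.test.example\n"
    "1002,US,sixty,12-345-6789,someone@gmail.com\n"
)


def parse_export(text):
    """Parse a CSV export into a dict of rows keyed by account id."""
    return {row["account_id"]: row for row in csv.DictReader(io.StringIO(text))}


def validate(source_rows, system_rows):
    """Run every check and return metrics plus the suspect data points."""
    checks = 0
    suspects = []
    for account_id, sys_row in system_rows.items():
        checks += 1
        src_row = source_rows.get(account_id)
        if src_row is None:
            suspects.append((account_id, "account", "missing from source export"))
            continue
        checks += 1  # type check
        if not sys_row["age"].isdigit():
            suspects.append((account_id, "age", "not an integer"))
        for field, pattern in REGEX_RULES.items():  # regex validations
            checks += 1
            if not pattern.fullmatch(sys_row[field]):
                suspects.append((account_id, field, "unexpected format"))
        for field in COMPARE_FIELDS:  # imported value vs. system value
            checks += 1
            if sys_row[field] != src_row[field]:
                suspects.append((account_id, field, "differs from source"))
    return {"checks": checks, "failed": len(suspects), "suspects": suspects}


report = validate(parse_export(SOURCE_EXPORT), parse_export(SYSTEM_EXPORT))
print(f"{report['failed']} of {report['checks']} checks failed")
for suspect in report["suspects"]:
    print(suspect)
```

Returning the suspect data points alongside the counts is what lets a person spend their hour on the handful of flagged records instead of sampling the whole import.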

What had begun as a very time-consuming, high-level spot check of the ingested data slowly transitioned into a robust ETL data pipeline. After a three-month project, my team had reduced the validation time from one team member's full work week to approximately one hour per week. We also produced our own runbook, to be used in the event of issues or outages during the process.

And most importantly, we accelerated the delivery of features by delivering safe and useful test data to the teams we supported.

Because healthcare data such as patient information has many similarities to the data we were working with, a similar approach could be very effective for creating and managing healthcare test data. This can lead to many more success stories for your QA and testing teams!

What is your healthcare test data management strategy?

tapQA has helped numerous organizations, particularly those in healthcare, with their test data management (TDM) strategy. Our TDM consultants will help build the processes, methods, and tools necessary to create usable and secure test data, eliminating the risks associated with using production data.

Contact us today to learn how tapQA can bolster your test data management efforts!

Colin Sullivan

Colin Sullivan is an SDET with over 6 years of experience in implementing test automation & internal quality tools. He helps lead tapQA's programs for Design Thinking in QA, as well as AI / Machine Learning for Test Automation.
